2026 and beyond

I did a podcast episode in mid-January talking about what I think is coming for agents and AI in 2026 and beyond. This post is basically the written version of that, covering what I discussed there, plus a few things I didn’t get to mention. So if you’ve already listened, consider this a wrap-up and extended cut; if you haven’t, please go and check that out~

This is my attempt at opening up the next few years - what I expect to see, mainly around agents. I’ve done some reasoning on what will keep happening this year and beyond, and I also took a sneak peek into the farther future. I know I’ve done this kind of thing several times before, but I think it’s still worth redoing since AI is evolving so fast. Things look pretty different from even a year ago.

2026

For 2026, I think one of the main topics will still be agents, but they will be more personalized and truly useful agents.

Proactive agent

The whole journey has evolved like this: first we had chatbots, only used for conversations. Then people wanted them to access external data, so we got basic tool use - searching the web, finding live data. As models became more powerful, they could handle more tools and became more robust. Then we gave them reasoning capabilities, and they became agents.

Expectations are growing. People want agents to do more personalized things, to be more useful, to know more about them. But the limitation is clear: current agents only do tasks explicitly requested by humans. They lack self-initiating ability. We say agents will help us take actions and save time, but the reality is that current agents, whether text-based, GUI-based, or combined, are slow. After you send a request like “shop some groceries for me”, you need to wait a while. That’s counter-intuitive to how we want them to save us from tedious daily work.

To improve this, agents should really be able to do tasks on their own; in other words, they have to understand how we use them, and then use that understanding to do tasks in the background without waiting for humans to initiate and intervene. They need to prepare what we want in advance. This makes me think of them as a more advanced form of autocomplete. The only difference is the scale of the task. Ordinary autocomplete, like Cursor’s Tab mode, handles lines of code across files, while task-based agents, like Manus, handle entire tasks.

To get there, they need to learn your usage patterns. For example: the agent knows you always ask it to summarize your email on Monday morning, so it automatically does that in the future; or it knows when you’re running low on groceries and handles that for you.
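
As a minimal sketch of that kind of pattern learning, here is one way an agent could spot requests that recur in the same weekly slot. The log shape, the `recurring_tasks` helper, and the `min_count` threshold are all assumptions for illustration, not how any real product does it.

```python
from collections import Counter
from datetime import datetime

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]

def recurring_tasks(history, min_count=3):
    """Return (task, weekday, hour) slots seen at least min_count times.

    history is a list of (task_name, datetime) pairs. Both the log shape
    and the threshold are assumptions made for this sketch.
    """
    slots = Counter(
        (task, WEEKDAYS[when.weekday()], when.hour) for task, when in history
    )
    return sorted(slot for slot, count in slots.items() if count >= min_count)

# Hypothetical request log: three Monday-morning summaries and a one-off.
history = [
    ("summarize email", datetime(2026, 1, 5, 9, 5)),
    ("summarize email", datetime(2026, 1, 12, 9, 40)),
    ("summarize email", datetime(2026, 1, 19, 9, 15)),
    ("book flight", datetime(2026, 1, 14, 16, 0)),
]
proactive = recurring_tasks(history)
```

Here only the Monday 9am email summary clears the threshold, so that is the one task the agent would consider running ahead of time. A real system would need fuzzier matching on both the task wording and the timing.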

But we also need to make sure they’re not annoying, which means timing also matters - it shouldn’t be too intrusive or too hidden. Otherwise it’s useless. This means the UI and UX need to change. We can’t just have input-box-only interfaces anymore. Gmail’s AI Inbox from last week is a good example: it didn’t fundamentally change how you interact with Gmail, but it added AI features that actually boost productivity. AI-powered tools don’t necessarily need an obvious input box; they should be bound to the task context itself.

If this develops well, it will significantly boost people’s productivity with agents, and people will start believing in them more.

Memory

The second key piece is memory. People have higher expectations of models, and models need to know users better to feel genuinely useful. This ties back directly to what I mentioned about proactive agents.

Currently there are a few general solutions for memory. Looking at it from a product perspective, there are basically three types:

Model uses a tool to store things into a memory space (ChatGPT, Gemini, Claude, Kimi, Qwen, etc.)

Model uses a conversation search tool to find specific topics from past chats (Claude, ChatGPT)

System summarizes user interactions daily, then extracts new information into detailed summarized memory (Claude)
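
To make the three types above concrete, here is a toy sketch that puts them side by side. The `ToyMemory` class and its method names are hypothetical, and the `extract` callable stands in for a model-written summary; none of this reflects how the products named above are actually built.

```python
class ToyMemory:
    """Toy illustration of three memory styles (all names are hypothetical)."""

    def __init__(self):
        self.facts = []    # type 1: facts saved explicitly through a memory tool
        self.chats = []    # past conversations, searched by type 2
        self.summary = []  # type 3: notes distilled from interactions

    def save_fact(self, fact):
        # Type 1: the model calls a tool to store something it should remember.
        if fact not in self.facts:
            self.facts.append(fact)

    def log_chat(self, text):
        self.chats.append(text)

    def search_chats(self, keyword):
        # Type 2: keyword search over past chats (real systems likely do more).
        needle = keyword.lower()
        return [chat for chat in self.chats if needle in chat.lower()]

    def summarize_day(self, extract):
        # Type 3: `extract` maps a chat to a note or None; it is a stand-in
        # for a model-generated summary.
        for chat in self.chats:
            note = extract(chat)
            if note and note not in self.summary:
                self.summary.append(note)

memory = ToyMemory()
memory.save_fact("Prefers window seats")
memory.log_chat("Can you plan a trip to Kyoto in April?")
memory.log_chat("Help me debug this Python script")
memory.summarize_day(lambda chat: "Planning a Kyoto trip" if "Kyoto" in chat else None)
hits = memory.search_chats("kyoto")
```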

These are pretty good, and I’ve seen promising performance from products like Claude with their memory system. But for broader general agents, it can, and should, be better. Memory isn’t just limited to basic information about us; it’s connected to our general preferences across life: shopping style, coding style, travel style, and a lot more. These affect how well an agent can complete tasks within your expectations. But it’s annoying to repeatedly mention or re-state preferences. So how products “form” these memories needs innovation too.

One approach I’ve been thinking about: hand off your apps and websites to an agent to explore first. Letting an agent learn your preferences will always be better than asking you to describe them, and you definitely don’t want to repeat yourself over and over. So you give the agent login access, and it browses and checks through your previous orders, then learns and summarizes your preferences, e.g. what do you usually order for groceries? Which airline do you always prefer? The agent then summarizes these into specialized documentation. Each time it goes to that specific app or website, the relevant instructions load automatically, ensuring the model already knows what it needs to know. This doesn’t require any special model capability - it just needs the product or environment (the “agent harness”) to be optimized to push what the model knows further.
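
A minimal sketch of the "load by default" part, assuming the agent has already written one short note per site. The `SITE_NOTES` contents, the example hostnames, and the `build_context` helper are hypothetical placeholders.

```python
from urllib.parse import urlparse

# Hypothetical per-site notes the agent wrote after exploring each account.
SITE_NOTES = {
    "grocer.example": "Usually orders oat milk, eggs, and spinach.",
    "flights.example": "Prefers direct flights and aisle seats.",
}

def build_context(url, task, notes=SITE_NOTES):
    """Prepend the stored notes for the site being visited, if any exist."""
    host = urlparse(url).hostname or ""
    parts = []
    if host in notes:
        parts.append(f"Known preferences for {host}: {notes[host]}")
    parts.append(f"Task: {task}")
    return "\n".join(parts)

context = build_context("https://grocer.example/cart", "Restock the weekly groceries")
```

The point of the design is that the lookup is keyed on where the agent is, not on whether the user or the model remembers to ask for it.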

The same approach would apply to many other use cases. And unlike recent skills or similar features, this doesn’t require the user or the model to pay extra attention. There won’t be cases where the model ignores specific preferences, because they’re loaded by default.

The above are all product-based, but we could also think from a more foundational side. Sometimes models don’t realize the importance of using user knowledge, so they just skip it. (I should note: my ideas here may be wrong since there’s no clear experimentation showing these work yet.)

We could rely on SAEs (sparse autoencoders) from mechanistic interpretability. Anthropic has used these in some of their research. Generally, SAEs can find activated feature points inside a model when it’s generating specific tokens. If we could use this technique to detect a model’s tendency to seek external knowledge, including user memories, then when that tendency is high, we could auto-inject relevant knowledge after that token. The model receives it and generates more useful responses.
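
To show just the control flow of that idea, here is a toy sketch. It does not run a real SAE or a real model: the activation values are hand-written stand-ins for a hypothetical "wants external knowledge" feature, and `inject_memory` and its `threshold` are assumptions for illustration.

```python
def inject_memory(tokens, activations, memory, threshold=0.8):
    """Insert a memory note after the first token whose activation crosses threshold.

    activations[i] is a made-up score for a hypothetical SAE feature that
    fires when the model seems to want external knowledge at tokens[i].
    """
    out = []
    injected = False
    for token, activation in zip(tokens, activations):
        out.append(token)
        if not injected and activation >= threshold:
            out.append(f"[memory: {memory}]")
            injected = True
    return out

tokens = ["Book", "my", "usual", "flight"]
activations = [0.1, 0.3, 0.9, 0.2]  # hand-written, not from a real model
result = inject_memory(tokens, activations, "prefers direct flights")
```

In this toy run the note lands right after "usual", the only token above the threshold. Whether a real feature like this exists and can be read reliably is exactly the open question mentioned above.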

We could use fewer, more specialized experts in MoE models. For example, a model with only three or four experts, each for a specific action: one for thinking/reasoning, another for tool use, and a final one for responding. Maybe one more for orchestrating which expert to use at each step.

There could be more innovation in memory on the model side.

Either way, we’re going to see a lot of surprises around proactive agents and memory. The key question now is how models can really boost productivity, because I think that’s where they can bring the most economic impact. Before they can truly impact society at large, they should first have a huge, noticeable impact on individuals.

Trends

Also, I think there are some trends that will either keep going or start to shift in the next year or two.

Model as a product

The first continuing trend is model as a product. This has been a long-running pattern, and I think it has two slightly different sides:

Model having unique abilities that can directly become a new product or feature (like GPT-Image, Nano Banana, Sora 2, Genie 3, etc.)

Model being strong enough that people can build general products around it with some engineering work (like early Manus on Claude 3.7 Sonnet, Claude Code, etc.)

Among these, I think Genie 3, Google’s latest world model, which became publicly available last week, has huge potential. You can create the world and control how you “walk around” inside it - the whole thing is customizable. It’s going to be much more fun than video models like Sora. And since it can generate interactive worlds, it has the potential to become one of the first reliable generative games. I didn’t play many games before, but if we get solid products based on robust world models, I might start - creating my own experiences sounds really fun lol. Some examples I saw on X:

Agent capacity

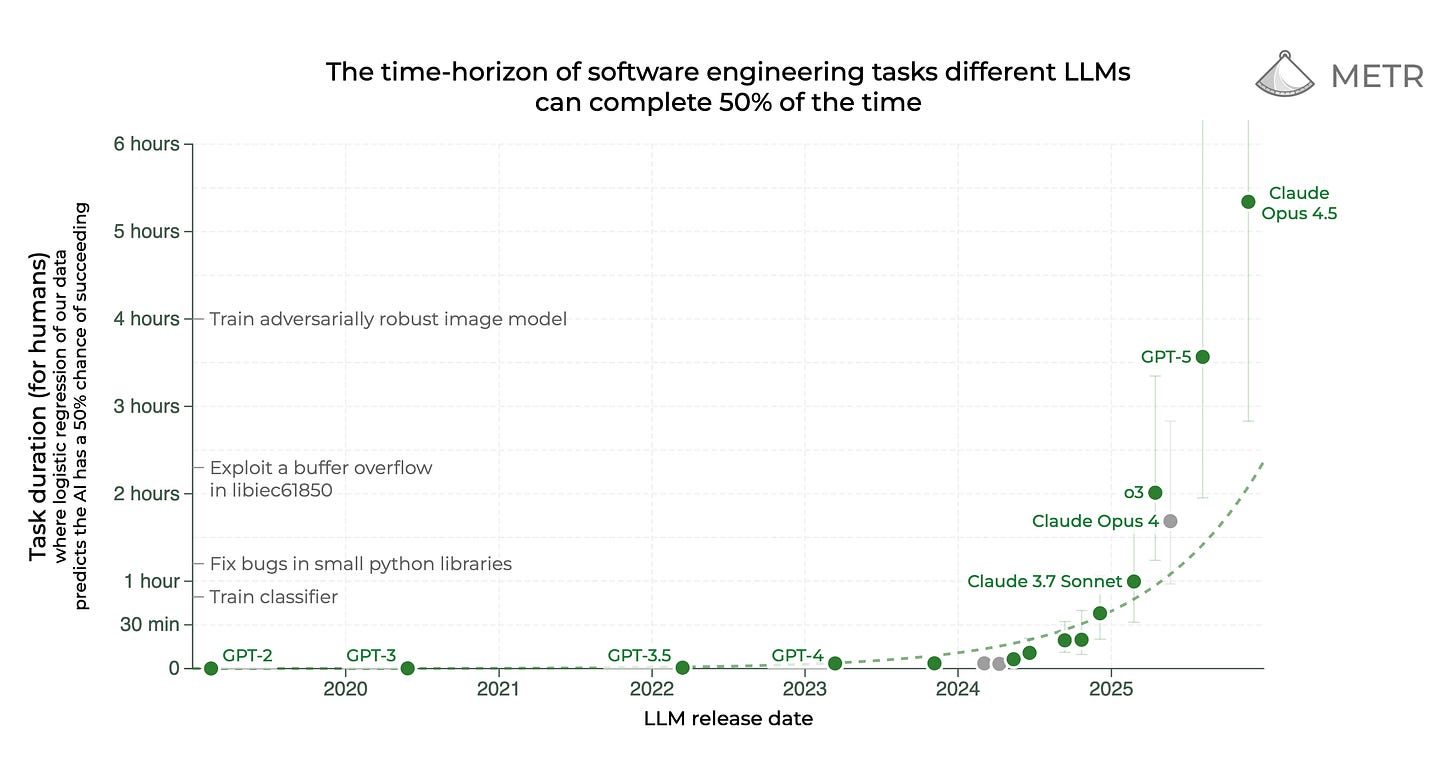

The second trend is agent capacity. Models will become more robust; that’s the clear trajectory. They’ll handle more long-tail tasks and even complex ones. They will help humans more in accelerating not just SWE work but also AI research itself, and maybe even automate some of it. We’ve already seen huge potential here, both in scientific research and other areas. And we have benchmarks tracking this, like METR Time Horizons, Vending-Bench, and many more.

The curve is going up and will continue pretty steadily.

Model alignment

The third, and one of the most important, is model alignment. As models become more capable and people put them into more production environments, the consequences of bad intentions become catastrophic. If a model can help scientists build a nuclear fusion reactor, then it can help bad people build nuclear weapons; if a model can help companies develop medicine, then it can help create bio-weapons as well; knowledge is connected anyway. I’ve written about my thoughts on this before, and there’s a lot of research on this, but one approach I find promising is the new Claude Constitution. OpenAI’s Model Spec is similar, but more rule-based: what you should do, what you shouldn’t. The Constitution is more about teaching the model how to be good and do good things - less like rules, more like parents teaching a child (I remember Dario described it as a letter “from a deceased parent sealed until adulthood”). I think this is a promising direction, and I expect more companies to explore this kind of approach.

Human-AI interaction

The last thing is how human-AI interaction will change. Right now, we interact with AI through apps, APIs, websites - all limited to mobile and desktop. I think a really good new portal is AI glasses, because they can see what you see, hear what you hear. And they can have their own ecosystem position - they don’t need to replace phones or anything else. They can add something new: a different way to interact and co-live with AI; unlike Humane AI Pin or Rabbit r1, which tried to replace the phone and couldn’t.

Since AI glasses can sense almost everything we can, they’d be a great add-on for the proactive agents I mentioned. They could recommend things or help complete tasks based on your real-world environment. Better memory systems become important here too.

We’re already seeing some products. For example, Pickle 1 looks kinda promising - I’ve already pre-ordered, waiting to see how it goes. And it seems Google is working on something too, mentioned by Demis at Davos 2026. But these are still early.

We can focus on the previous parts for now; the glasses stuff is more a matter of hardware, software, and ecosystem catching up.

Future

I’ve written about the future many times before, but AI moves so fast that things look quite different from even a year ago. So I think it’s still worth sharing what I expect for the farther future - I have some new thoughts after seeing recent posts, interviews, and doing my own thinking.

Before I go further, I should mention Dario’s new essay, The Adolescence of Technology. It’s a serious piece that maps out the risks we’re facing and how we might address them. I have a lot of respect for how he approaches these problems - careful, concrete, and not from a doomer’s angle. If you haven’t read it, I’d recommend it. What I’m writing here is more of a personal take, from someone who will live through this transition; and again, these are all my personal thoughts, so they may be incorrect.

What I want to see

The world on the other side: one where survival anxiety isn’t the default mode of human life. Where scientific progress in medicine, climate, and longevity happens way faster than before. Where people can pursue what actually matters to them, not just what pays bills.

Dario calls this “Machines of Loving Grace”. I think he’s right about what’s possible. The real question is whether we can get through the middle part without everything falling apart.

I’ve imagined this good future a lot. Robots handling physical labor. Abundance making material scarcity less relevant. People free from the constant pressure of “making a living” and able to actually live. It sounds utopian, but I don’t think it’s impossible - just hard to get to, and it will take a lot of effort.

Some hard questions

If AI creates more value than you, what’s your purpose?

This will be the lived experience of a lot of people soon. Companies will do the math: AI is faster, cheaper, better. The rational move is to let people go. If that happens at scale, the whole “AI benefits humanity” thing falls apart. You can’t really benefit from something that made you economically irrelevant and gave you nothing back.

I think to prevent this, companies and society need some kind of common understanding: even if AI creates more value, we should still preserve a place for humans in the foreseeable future. After a company takes what it needs for operations, it should return the rest of that value to the workers who got replaced. This should work more like a social contract. The value came from somewhere.

This is really hard to execute. There’s no enforcement mechanism, no clear policy, and competitive pressure pushes against it. But that’s why the journey is difficult. The tech is arriving faster than our social systems can adapt; that’s why I said we are the ones who need to adapt to the development of these advanced systems. We’ve almost never seen this combination before, and our existing frameworks aren’t built for it.

Meaning without work

Even if we solve the material side - even if displaced workers get income - there’s still the meaning problem. People don’t just want stuff. They want to matter, to be needed. Work used to provide that, even when the work itself was boring.

I’ve thought about this a lot, and there are a lot of discussions out there. In a world where AI handles most cognitive tasks, we’ll need new structures for purpose. Creative work, community, exploration, caregiving - things that matter to us even if they don’t maximize GDP. But this won’t happen automatically; we have to build it intentionally.

Maybe this sounds abstract, but it’s actually pretty concrete. What would you do if you didn’t have to work? Not vacation-mode “what would you do”, but actually, long-term, what would give your life structure and meaning? For me, I think it’s exploring unknowns, experiencing different places, maybe creating things. But a lot of people haven’t had the chance to even think about that question, because survival comes first.

The transition will force us to answer it, and I think the answer will be different for everyone, which is kinda the point. Freedom to figure out what matters to you, rather than having it dictated by economic necessity.

The transition itself

I think it’s quite obvious that this transition won’t be peaceful. I’ve said before that millions will lose jobs, and society might break down in parts. That’s what history tells us: the Industrial Revolution caused massive suffering before things got better. This could be similar, but faster and broader.

The question is whether we can make the transition as humane as possible. Not “acceptable sacrifice for progress” - that framing has been used to justify a lot of harm historically. More like: we acknowledge it will be hard, and we try to take care of each other through it.

Why I’m still optimistic

I know the risks are huge. I’ve read a lot of doomer takes, and I get where they’re coming from. Powerful AI in the wrong hands, misaligned objectives, societal collapse, and a lot more; these are indeed real.

But there are a lot of researchers working on alignment and interpretability. Some companies (like Anthropic and DeepMind) are actually taking safety and related problems seriously. The new Claude Constitution is trying to teach models to be good, not just follow rules. People are having these conversations instead of ignoring them. That matters.

I’ve thought about how to hold both things - the hopeful vision and knowing that getting there will be rough. Honestly, it comes down to something simple: I believe our world can be much better, and I want to see that happen. Maybe help build it. That’s the faith I keep.

The years ahead will be hard, and maybe it will take us decades. But I keep coming back to: so what? Why be afraid?

There’s still more to write, but I think this is enough for now. I’ll save the rest for a future post.

Anyway, I hope the world keeps getting better and better in 2026 and beyond.